Path planning

ADVs work step by step, deciding which short paths to take in order to complete a long-range path. This is known as path planning. The simple aim of path planning is to get the vehicle to its destination. The sensors and methods used by different manufacturers vary a little, but the algorithm used for path planning will be something like the following description.

The vehicle needs to determine an approximate long-range path, which is made up of constantly changing short-range paths. These short-range paths (turn left, speed up, change lanes etc.) are what the vehicle is capable of achieving under the current operating conditions. Any short-range path that involves coming too close to an obstacle, entering the path of an oncoming vehicle without enough time to complete the manoeuvre, or a similar risk, is rejected as an option. As another example, a vehicle travelling at 90 km/h (25 m/s) would not be able to take a path with a sharp left turn in 25 m, but it would be able to take a shallow curve in the road without changing speed, if the road was clear for a suitable distance ahead.
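The rejection step can be sketched in Python. This is a minimal illustration, not any manufacturer's method. The `Manoeuvre` type, the `is_feasible` function, the 4 m/s² lateral acceleration limit and the 2 m clearance limit are all assumptions made for the example, as is reading "a sharp left turn in 25 m" as a turn of 25 m radius. The only physics used is the standard relation that a vehicle at speed v on a curve of radius r needs a lateral acceleration of v²/r.

```python
from dataclasses import dataclass

# Assumed limits for illustration only; real values depend on the
# vehicle, tyres, road surface and the manufacturer's safety margins.
MAX_LATERAL_ACCEL = 4.0  # m/s^2
MIN_CLEARANCE = 2.0      # m


@dataclass
class Manoeuvre:
    name: str
    speed: float      # m/s
    radius: float     # m, radius of the curve to be followed
    clearance: float  # m, closest approach to any obstacle


def lateral_accel(speed: float, radius: float) -> float:
    """Lateral acceleration needed to follow a curve: v^2 / r."""
    return speed ** 2 / radius


def is_feasible(m: Manoeuvre) -> bool:
    """Reject manoeuvres that corner too hard or pass too close to an obstacle."""
    return (lateral_accel(m.speed, m.radius) <= MAX_LATERAL_ACCEL
            and m.clearance >= MIN_CLEARANCE)


candidates = [
    Manoeuvre("sharp left turn", speed=25.0, radius=25.0, clearance=5.0),
    Manoeuvre("shallow curve", speed=25.0, radius=500.0, clearance=5.0),
    Manoeuvre("lane change near obstacle", speed=25.0, radius=800.0, clearance=0.5),
]

feasible = [m.name for m in candidates if is_feasible(m)]
print(feasible)  # ['shallow curve']
```

At 25 m/s the sharp turn would need 25 m/s² of lateral acceleration, far above the assumed limit, while the shallow curve needs only 1.25 m/s². The lane change is rejected on clearance alone.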

The remaining feasible paths are evaluated and, once the best path is determined, a set of throttle, brake and steering commands is sent to the appropriate system controllers and actuators.
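How the "best" path is chosen is not specified here. One common approach, shown below as an assumption rather than the method any particular vehicle uses, is to give each feasible path a cost and pick the lowest. The `Candidate` fields, the three weights and the example values are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical weights; a real planner would tune these and use many more terms.
W_PROGRESS = 1.0  # reward for distance gained toward the destination
W_RISK = 5.0      # penalty that grows as obstacles get closer
W_COMFORT = 0.5   # penalty for harsh acceleration


@dataclass
class Candidate:
    name: str
    progress_m: float   # metres gained along the long-range path
    clearance_m: float  # metres to nearest obstacle (> 0, as infeasible paths are already rejected)
    peak_accel: float   # m/s^2, largest acceleration the manoeuvre demands


def cost(c: Candidate) -> float:
    """Lower is better: reward progress, penalise proximity and harshness."""
    return (-W_PROGRESS * c.progress_m
            + W_RISK / c.clearance_m
            + W_COMFORT * c.peak_accel)


def best_path(candidates: list) -> Candidate:
    """Return the feasible candidate with the lowest cost."""
    return min(candidates, key=cost)


feasible_paths = [
    Candidate("keep lane", progress_m=1.25, clearance_m=10.0, peak_accel=0.2),
    Candidate("change lane", progress_m=1.25, clearance_m=3.0, peak_accel=1.0),
    Candidate("slow down", progress_m=1.0, clearance_m=12.0, peak_accel=2.0),
]

chosen = best_path(feasible_paths)
print(chosen.name)  # keep lane
```

The chosen candidate would then be translated into throttle, brake and steering commands. That step depends on the vehicle's controllers and is left out of the sketch.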

The time required for this ‘decision’ varies depending on the system architecture and processor speed, but is of the order of 50 milliseconds (a twentieth of a second). The whole process is repeated many times until the car reaches its destination. To put this in context, at 90 km/h (25 m/s) a car would travel about 1.25 m in that time before the short-range path planning process repeats. This is arguably much faster than a human could react.
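The 1.25 m figure is simply speed multiplied by cycle time. A short Python check, with `distance_per_cycle` as a name made up for this example:

```python
def distance_per_cycle(speed_kmh: float, cycle_s: float) -> float:
    """Distance in metres covered during one planning cycle."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_ms * cycle_s


d = distance_per_cycle(90, 0.05)  # 90 km/h, 50 ms cycle
print(f"{d:.2f} m")  # 1.25 m
```

The same function shows how the distance grows with a slower processor: a 100 ms cycle at the same speed would cover 2.5 m between decisions.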

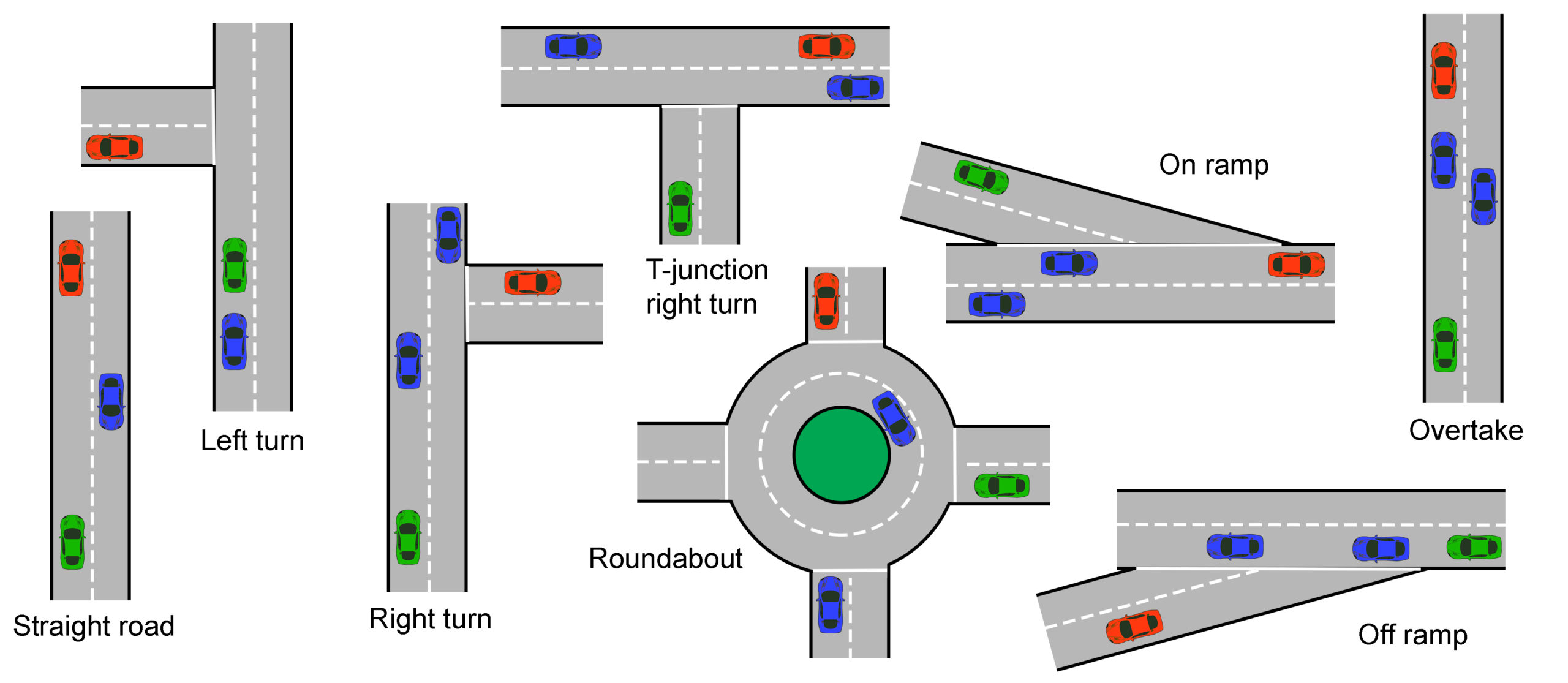

There is a range of actions that a vehicle may need to carry out. Some of these are represented in Figure 8 (driving on the left). In each case the green car is the current position and the red car represents the position after the chosen action has completed. The blue car, or cars, represents typical traffic hazards. In reality, there will be even more complexity, for example pedestrians, parked cars, road works and more.

Figure 8 Path planning strategy and evolution

Collision avoidance

Collision avoidance can also become collision mitigation; in other words, the least-worst crash scenario is selected. Many ADAS vehicles already have collision avoidance, but they only warn the driver and pre-charge or possibly apply the brakes; they do not mitigate.

Front-facing sensors such as cameras, radar or lidar are used and often form part of an active cruise control (ACC) system. This is SAE automated driving level 1.
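The staged response described above (warn, pre-charge, brake) can be sketched with time-to-collision, which is the gap to the vehicle ahead divided by the closing speed. This is an illustrative assumption, not a description of any production system. The three thresholds and the function names are hypothetical.

```python
import math

# Hypothetical thresholds in seconds; production systems use their own
# calibrated values and usually take many more inputs into account.
WARN_TTC = 3.0
PRECHARGE_TTC = 2.0
BRAKE_TTC = 1.0


def time_to_collision(gap_m: float, closing_speed_ms: float) -> float:
    """Seconds until contact if neither vehicle changes speed."""
    if closing_speed_ms <= 0:
        return math.inf  # the gap is steady or opening, so no collision course
    return gap_m / closing_speed_ms


def response(ttc_s: float) -> str:
    """Pick the most urgent action whose threshold has been crossed."""
    if ttc_s < BRAKE_TTC:
        return "apply brakes"
    if ttc_s < PRECHARGE_TTC:
        return "pre-charge brakes"
    if ttc_s < WARN_TTC:
        return "warn driver"
    return "no action"


ttc = time_to_collision(gap_m=40.0, closing_speed_ms=25.0)
print(ttc, response(ttc))  # 1.6 pre-charge brakes
```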

Higher-level systems need to decide if a collision is going to occur and, if corrective or evasive action is not possible, the type of crash must be chosen. This is arguably one of the most contentious areas of self-driving technology. What should a car decide if the options are a head-on, high-speed crash that will kill its occupants, running over a pedestrian, or crashing into a static object?

Other situations

No matter how much planning and preparation goes into a fully autonomous vehicle (level 5), there will always be situations that are unique. The vehicle will need to be able to deal with them or re-route around them.

Pedestrians are one of the most unpredictable things we meet when driving. Even a simple situation like a pedestrian waiting to cross the road, when not at a designated crossing place, can be a challenge. For example, a driver may wave to the pedestrian or flash their lights to say they are going to stop or slow down. Alternatively, a pedestrian may stumble by the side of the road and could look like they are about to cross.

Figure 9 Narrow road

I live near the countryside in the UK, where there are lots of narrow roads with passing places, and sometimes both cars have to pass slowly with the nearside wheels off the road. If I meet a car coming the other way, either I or the oncoming car would move over or, in some extreme cases, one of us would have to reverse to a passing place. If two autonomous cars meet on this type of narrow road, who has to reverse? Will there be a hierarchy where the newer car gets priority? Or maybe the cars will have communicated to prevent the situation occurring in the first place.

Automated driving system

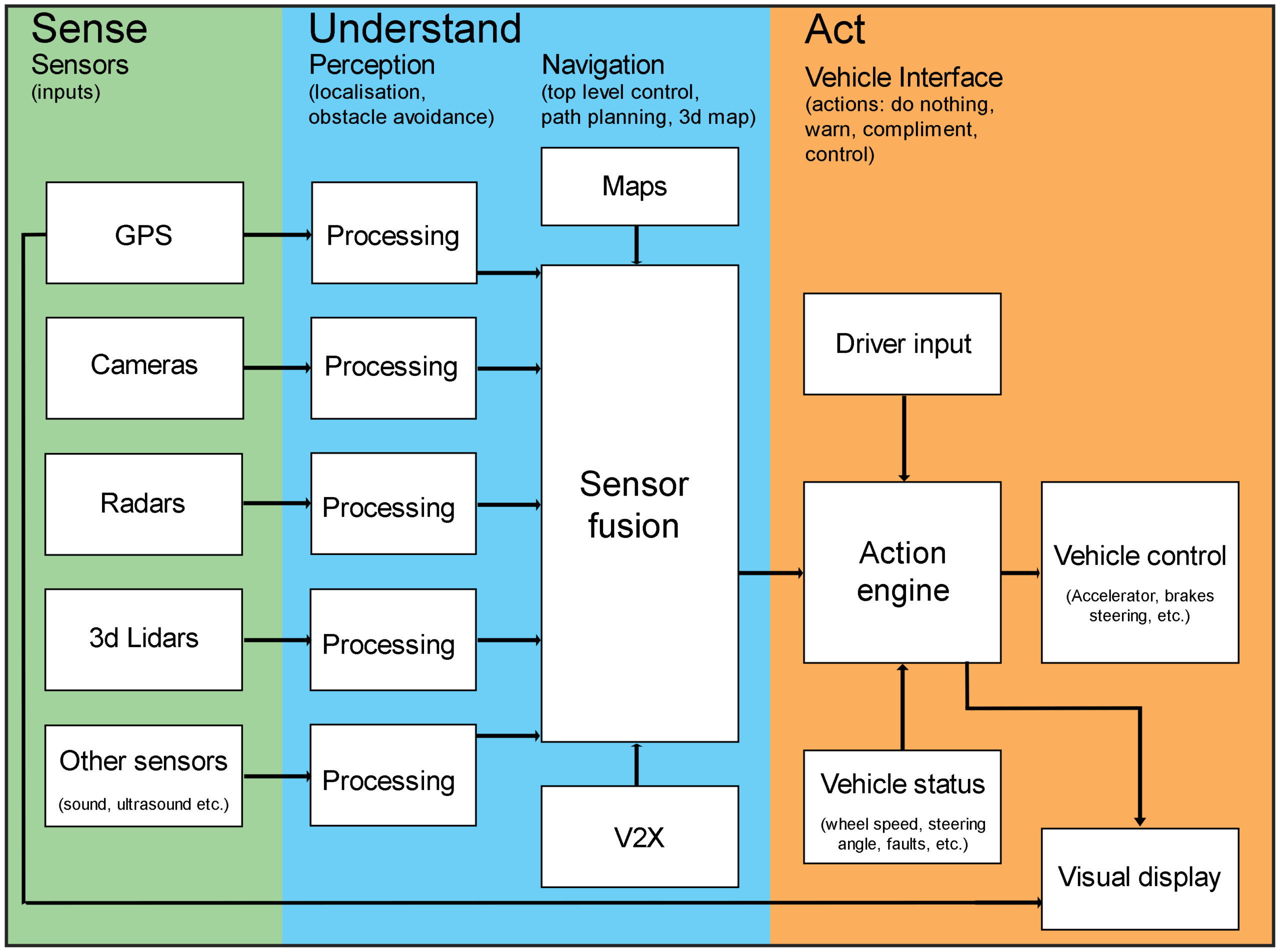

As with all complex systems, it is helpful to consider an automated driving system as a block diagram, using the standard input, control, output method:

Automated driving system

The ‘Sense’ block above is a range of sensors, which in this case are:

- GPS receiver to give navigational position, and a compass for direction of travel

- Cameras to see the general surroundings (stereo cameras perceive depth)

- Radar to build a picture of the longer-range surroundings even in poor light or bad weather

- Lidar to build up a 3D image of the surroundings

- Other sensors appropriate to the particular design.

Information from these sensors is processed individually and then combined, or fused, in the ‘Understand’ block. Maps come from stored data just like on a normal navigation system, except that regular updates are far more important. V2X is shorthand for vehicle to everything. Updates can be downloaded from the cloud, and information from other connected cars can be processed.
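The article does not say how the fusion is done, and real systems use far more elaborate methods. As a minimal sketch of the idea, the Python below combines two independent distance estimates by inverse-variance weighting, a simple and widely used technique in which the more certain sensor gets more say. The `fuse` function and the radar and camera readings are hypothetical.

```python
def fuse(estimates: list) -> tuple:
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes the estimates are
    independent and every variance is greater than zero.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings of the distance to the car ahead, in metres.
radar = (50.0, 0.25)   # radar: more precise range, variance 0.25 m^2
camera = (52.0, 1.0)   # camera: less precise range, variance 1.0 m^2

distance, variance = fuse([radar, camera])
print(round(distance, 2), round(variance, 2))  # 50.4 0.2
```

The fused estimate sits closer to the radar reading because the radar was given the smaller variance, and the fused variance is lower than that of either sensor alone.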

The ‘Act’ block on the right-hand side of the diagram is where the vehicle is finally controlled, or driven. The action engine combines information from sensor fusion with driver input and live vehicle status such as speed or steering angle. It then outputs to a visual display and the actual vehicle control actuators, the key outputs being the:

- Accelerator

- Brakes

The level of driver input will depend on the level of automation.

Summary

In order for the computing system of an ADV to plan the next path, it needs detailed and up-to-date information, and it has to react very quickly. The information comes from sensors on and around the vehicle. Sensing is covered in more detail in the next article.